Loading...

Searching...

No Matches

mont.c File Reference

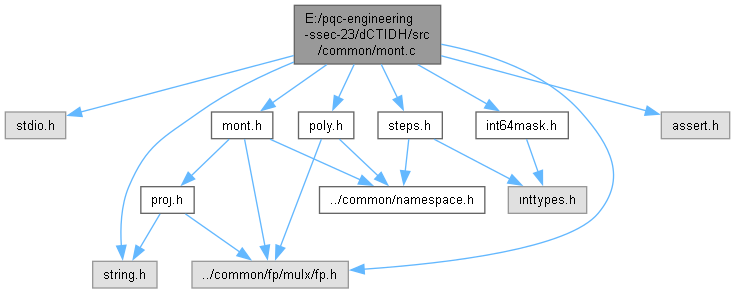

#include <stdio.h>#include <string.h>#include <assert.h>#include "steps.h"#include "mont.h"#include "../common/fp/mulx/fp.h"#include "poly.h"#include "int64mask.h"

Include dependency graph for mont.c:

Go to the source code of this file.

Functions | |

| void | xA24 (proj *A24, const proj *A) |

| void | xDBLADD (proj *R, proj *S, proj const *P, proj const *Q, proj const *PQ, proj const *A24, int Aaffine) |

| void | xDBL (proj *Q, proj const *P, const proj *A24, int Aaffine) |

| void | xADD (proj *S, proj const *P, proj const *Q, proj const *PQ) |

| void | xMUL_dac (proj *Q, proj const *A24, int Aaffine, proj const *P, int64_t dac, int64_t daclen, int64_t maxdaclen) |

| void | xMUL (proj *Q, proj const *A, int Aaffine, proj const *P, uintbig const *k, int64_t kbits) |

| void | xMUL_vartime (proj *Q, proj const *A, int Aaffine, proj const *P, uintbig const *k) |

| void | xISOG_matryoshka (proj *A, proj *P, int64_t Plen, proj const *K, int64_t k, int64_t klower, int64_t kupper) |

| void | xISOG (proj *A, proj *P, int64_t Plen, proj const *K, int64_t k) |

| void | xISOG_old (proj *A, proj *P, proj const *K, int64_t k) |

Function Documentation

◆ xA24()

◆ xADD()

◆ xDBL()

◆ xDBLADD()

| void xDBLADD | ( | proj * | R, |

| proj * | S, | ||

| proj const * | P, | ||

| proj const * | Q, | ||

| proj const * | PQ, | ||

| proj const * | A24, | ||

| int | Aaffine | ||

| ) |

◆ xISOG()

◆ xISOG_matryoshka()

| void xISOG_matryoshka | ( | proj * | A, |

| proj * | P, | ||

| int64_t | Plen, | ||

| proj const * | K, | ||

| int64_t | k, | ||

| int64_t | klower, | ||

| int64_t | kupper | ||

| ) |

Definition at line 429 of file mont.c.

430{

434

438

441 {

442 sqrtvelu = 1;

446 }

447

449

452

453 // A -> (A : C)

454 // Aed.z = 2C

456

457 // Aed.x = A + 2C

459

460 // A24.x = A + 2C

462

463 // A24.z = 4C

465

466 // Aed.z = A - 2C

468

470#ifndef NDEBUG

472

475

476 Minit[1] = 1;

477#endif

480#ifndef NDEBUG

481 Minit[2] = 1;

482#endif

483

485 {

487 {

489 {

493 {

495 {

499#ifndef NDEBUG

501#endif

502 continue;

503 }

508#ifndef NDEBUG

510#endif

511 continue;

512 }

513 }

514 else

515 {

519 {

521 {

524#ifndef NDEBUG

526#endif

527 continue;

528 }

533#ifndef NDEBUG

535#endif

536 continue;

537 }

538 }

539

541 {

543 {

548#ifndef NDEBUG

550#endif

551 continue;

552 }

554 {

557#ifndef NDEBUG

559#endif

560 continue;

561 }

563 {

567#ifndef NDEBUG

569#endif

570 continue;

571 }

573 {

577 {

582#ifndef NDEBUG

584#endif

585 continue;

586 }

587 }

588 }

589 }

590 }

591 else

592 {

594 {

595#ifndef NDEBUG

597#endif

599 }

600 }

601

608 } else {

609 fixPlen = 1;

610 }

613 {

616 }

619 {

620 //precomputations

621 // ( X + Z )

623 // ( X - Z )

625 }

626

628 {

631

633 {

637 }

638

640

644

646 {

650 }

653

657

659

660 poly_multieval_postcompute(v, bs, (const fp *)T1, 2 * gs + 1, (const fp *)TI, (const fp *)TI + 2 * bs, (const fp *)precomp);

664

665 poly_multieval_postcompute(v, bs, (const fp *)Tminus1, 2 * gs + 1, (const fp *)TI, (const fp *)TI + 2 * bs, (const fp *)precomp);

669

671 {

674

679

683

684 poly_multieval_postcompute(v, bs, (const fp *)TP, 2 * gs + 1, (const fp *)TI, (const fp *)TI + 2 * bs, (const fp *)precomp);

688

689 poly_multieval_postcompute(v, bs, (const fp *)TPinv, 2 * gs + 1, (const fp *)TI, (const fp *)TI + 2 * bs, (const fp *)precomp);

693 }

694

697 {

707 {

716 }

717 }

718 }

719 else

720 {

721 // no sqrtvelu

723

729

731 {

736 }

737

739 {

741 // 2 < i < (k-1)/2

750 {

751 // point evaluation

760 }

761 }

762 }

763

765 {

766 // point evaluation

767 // [∏( X − Z )( X i + Z i ) + ( X + Z )( X i − Z i )]^2

770

771 // X' = X * Qbatch[h].x

772 // X' = X * [∏( X − Z )( X i + Z i ) + ( X + Z )( X i − Z i )]^2

775 }

776

777 // Aed.x = Aed.x^k * Abatch.z^8

779 // Aed.z = Aed.z^k * Abatch.x^8

781

782 //compute Montgomery params

783 // ( A' : C' ) = ( 2 ( a'_E + d'_E ) : a'_E − d'_E )

786 fp_double1(&A->x);

787}

assert(var1 eq var2)

References assert(), fp_1, fp_copy, i, j, poly_multieval_postcompute, poly_multieval_precompute, poly_multieval_precomputesize, poly_multiprod2, poly_multiprod2_selfreciprocal, poly_tree1, poly_tree1size, steps, xADD, and xDBL.

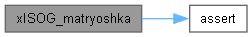

Here is the call graph for this function:

◆ xISOG_old()

Definition at line 794 of file mont.c.

795{

798

801

802 fp_add3(&Aed.z, (const fp *)&A->z, (const fp *)&A->z); //compute twisted Edwards curve coefficients

805

808

811

816

820

824 {

826 }

827

829 {

840 }

841

842 // point evaluation

843 fp_sq1(&Q.x);

844 fp_sq1(&Q.z);

847

848 //compute Aed.x^k, Aed.z^k

850

851 //compute prod.x^8, prod.z^8

852 fp_sq1(&prod.x);

853 fp_sq1(&prod.x);

854 fp_sq1(&prod.x);

855 fp_sq1(&prod.z);

856 fp_sq1(&prod.z);

857 fp_sq1(&prod.z);

858

859 //compute image curve parameters

862

863 //compute Montgomery params

867}

References assert(), i, xA24, xADD, and xDBL.

Here is the call graph for this function:

◆ xMUL()

| void xMUL | ( | proj * | Q, |

| proj const * | A, | ||

| int | Aaffine, | ||

| proj const * | P, | ||

| uintbig const * | k, | ||

| int64_t | kbits | ||

| ) |

◆ xMUL_dac()

| void xMUL_dac | ( | proj * | Q, |

| proj const * | A24, | ||

| int | Aaffine, | ||

| proj const * | P, | ||

| int64_t | dac, | ||

| int64_t | daclen, | ||

| int64_t | maxdaclen | ||

| ) |

Definition at line 110 of file mont.c.

111{

118 // int64_t collision = fp_iszero(&Pinput.z);

120

121 for (;;)

122 {

126 break;

127

128 // invariant: P1+P2 = P3

129 // odd dac: want to replace P1,P2,P3 with P1,P3,P1+P3

130 // even dac: want to replace P1,P2,P3 with P2,P3,P2+P3

131

133 // invariant: P1+P2 = P3

134 // all cases: want to replace P1,P2,P3 with P1,P3,P1+P3

135

136 // collision |= want&fp_iszero(&P2.z);

138

143

144 maxdaclen -= 1;

145 daclen -= 1;

146 dac >>= 1;

147 }

148

150 // in case of collision, input has the right order

151}