Variables

| Type | Variable |
| --- | --- |
| tuple | NETRC_FILES = (".netrc", "_netrc") |
| | DEFAULT_CA_BUNDLE_PATH = certs.where() |
| dict | DEFAULT_PORTS = {"http": 80, "https": 443} |
| str | DEFAULT_ACCEPT_ENCODING |
| | UNRESERVED_SET |
| bytes | _null = "\x00".encode("ascii") |
| bytes | _null2 = _null * 2 |
| bytes | _null3 = _null * 3 |

Detailed Description

requests.utils

This module provides utility functions that are used within Requests that are also useful for external consumption.

Function Documentation

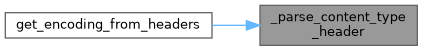

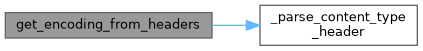

◆ _parse_content_type_header()

_parse_content_type_header(header)   [protected]

Returns the content type and parameters from the given header.

:param header: string

:return: tuple containing the content type and a dictionary of parameters

Definition at line 513 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.get_encoding_from_headers().
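The split described above can be sketched in plain Python. This is a minimal sketch, assuming the input is a single Content-Type string such as `text/html; charset=UTF-8`; the name `parse_content_type` is hypothetical and the code is an illustration, not the vendored implementation.

```python
def parse_content_type(header):
    """Split a Content-Type header into (content_type, params_dict).

    Hypothetical stand-in for _parse_content_type_header.
    """
    tokens = header.split(";")
    content_type, params = tokens[0].strip(), tokens[1:]
    params_dict = {}
    strip_chars = "\"' "
    for param in params:
        param = param.strip()
        if not param:
            continue
        # A bare parameter without "=" is recorded with the value True.
        key, value = param, True
        eq = param.find("=")
        if eq != -1:
            key = param[:eq].strip(strip_chars)
            value = param[eq + 1 :].strip(strip_chars)
        # Parameter names are case-insensitive, so normalise them.
        params_dict[key.lower()] = value
    return content_type, params_dict
```

For example, `parse_content_type('text/html; charset="UTF-8"')` yields `("text/html", {"charset": "UTF-8"})`, which is the shape a caller looking for the charset needs.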

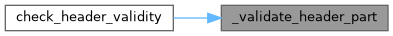

◆ _validate_header_part()

[protected]

Definition at line 1043 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.check_header_validity().

◆ add_dict_to_cookiejar()

add_dict_to_cookiejar(cj, cookie_dict)

Returns a CookieJar from a key/value dictionary.

:param cj: CookieJar to insert cookies into.

:param cookie_dict: Dict of key/values to insert into CookieJar.

:rtype: CookieJar

Definition at line 477 of file utils.py.

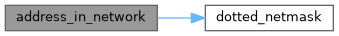

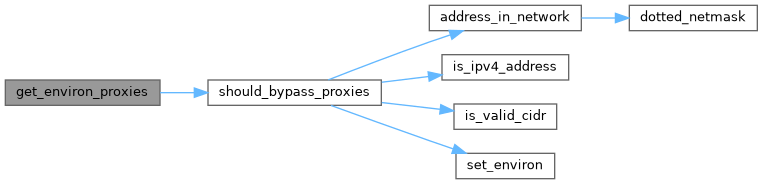

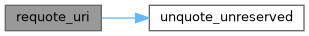

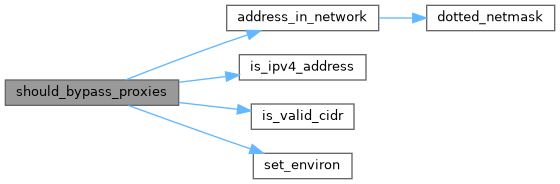

◆ address_in_network()

address_in_network(ip, net)

Checks whether an IP address belongs to a network subnet.

Example: returns True if ip = 192.168.1.1 and net = 192.168.1.0/24; returns False if ip = 192.168.1.1 and net = 192.168.100.0/24.

:rtype: bool

Definition at line 681 of file utils.py.

References pip._vendor.requests.utils.dotted_netmask(), and i.

Referenced by pip._vendor.requests.utils.should_bypass_proxies().
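The membership test in the example above can be reproduced with the standard-library `ipaddress` module. This is a swapped-in technique for illustration, not how the vendored function is written; `ip_in_network` is a hypothetical name and the sketch assumes IPv4 dotted-quad input with a CIDR suffix.

```python
import ipaddress


def ip_in_network(ip, net):
    """Return True if dotted-quad `ip` lies inside CIDR block `net`.

    Hypothetical illustration using ipaddress rather than manual bit masks.
    """
    # strict=False tolerates host bits being set in the network address.
    network = ipaddress.ip_network(net, strict=False)
    return ipaddress.ip_address(ip) in network
```

With the values from the docstring, `ip_in_network("192.168.1.1", "192.168.1.0/24")` is True and `ip_in_network("192.168.1.1", "192.168.100.0/24")` is False.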

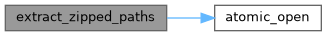

◆ atomic_open()

atomic_open(filename)

Write a file to disk in an atomic fashion.

Definition at line 301 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.extract_zipped_paths().
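A common way to get an atomic write is to write into a temporary file in the target directory and rename it into place only once writing succeeds. The sketch below shows that pattern as a context manager; the name `atomic_write` is hypothetical, and the sketch assumes the target directory is writable and that `os.replace` is atomic on the platform's filesystem.

```python
import contextlib
import os
import tempfile


@contextlib.contextmanager
def atomic_write(filename):
    """Yield a binary file handle; the target appears only on success.

    Hypothetical illustration of the temp-file-then-rename pattern.
    """
    # Create the temp file next to the target so the rename stays on
    # one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=os.path.dirname(filename) or ".")
    try:
        with os.fdopen(fd, "wb") as handle:
            yield handle
        # Swap the finished temp file into place in one step.
        os.replace(tmp_name, filename)
    except BaseException:
        # On any failure, discard the partial temp file and re-raise.
        os.remove(tmp_name)
        raise
```

If the body of the `with` block raises, the existing target file is left untouched and no temporary file is left behind.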

◆ check_header_validity()

check_header_validity(header)

Verifies that header parts don't contain leading whitespace, reserved characters, or return characters.

:param header: tuple, in the format (name, value).

Definition at line 1032 of file utils.py.

References pip._vendor.requests.utils._validate_header_part().

◆ default_headers()

default_headers()

:rtype: requests.structures.CaseInsensitiveDict

Definition at line 898 of file utils.py.

References pip._vendor.requests.utils.default_user_agent().

◆ default_user_agent()

default_user_agent(name="python-requests")

Return a string representing the default user agent.

:rtype: str

Definition at line 889 of file utils.py.

Referenced by pip._vendor.requests.utils.default_headers().

◆ dict_from_cookiejar()

dict_from_cookiejar(cj)

Returns a key/value dictionary from a CookieJar.

:param cj: CookieJar object to extract cookies from.

:rtype: dict

Definition at line 462 of file utils.py.

References i.

◆ dict_to_sequence()

dict_to_sequence(d)

◆ dotted_netmask()

dotted_netmask(mask)

Converts a mask from /xx format to xxx.xxx.xxx.xxx.

Example: if mask is 24, the function returns 255.255.255.0.

:rtype: str

Definition at line 696 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.address_in_network().
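The conversion is plain bit arithmetic: a /24 mask leaves 32 - 24 = 8 host bits, so the host part is 2^8 - 1 = 0xFF, and 0xFFFFFFFF XOR 0xFF = 0xFFFFFF00, which is 255.255.255.0. The sketch below performs that computation; `prefix_to_netmask` is a hypothetical name and the code is an illustration, not the vendored source.

```python
import socket
import struct


def prefix_to_netmask(mask):
    """Convert a prefix length (0..32) to a dotted-quad netmask.

    Hypothetical illustration of the /xx -> xxx.xxx.xxx.xxx conversion.
    """
    # All ones XOR the low (32 - mask) host bits leaves the top `mask` bits set.
    bits = 0xFFFFFFFF ^ ((1 << (32 - mask)) - 1)
    # Pack as big-endian (network byte order) and render as a dotted quad.
    return socket.inet_ntoa(struct.pack(">I", bits))
```

`prefix_to_netmask(24)` returns `"255.255.255.0"`, matching the example in the docstring.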

◆ extract_zipped_paths()

extract_zipped_paths(path)

Replace nonexistent paths that look like they refer to a member of a zip archive with the location of an extracted copy of the target, or else just return the provided path unchanged.

Definition at line 263 of file utils.py.

References pip._vendor.requests.utils.atomic_open(), and i.

◆ from_key_val_list()

from_key_val_list(value)

Take an object and test to see if it can be represented as a dictionary. Unless it cannot be represented as such, return an OrderedDict, e.g.,

::

    >>> from_key_val_list([('key', 'val')])
    OrderedDict([('key', 'val')])
    >>> from_key_val_list('string')
    Traceback (most recent call last):
    ...
    ValueError: cannot encode objects that are not 2-tuples
    >>> from_key_val_list({'key': 'val'})
    OrderedDict([('key', 'val')])

:rtype: OrderedDict

Definition at line 313 of file utils.py.

References i.

◆ get_auth_from_url()

get_auth_from_url(url)

Given a URL with authentication components, extract them into a tuple of (username, password).

:rtype: (str, str)

Definition at line 1016 of file utils.py.

References i.
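Credential extraction of this kind can be sketched with `urllib.parse`. This is a minimal sketch, assuming credentials appear as `user:password@host` and may be percent-encoded; `auth_from_url` is a hypothetical name, and returning `("", "")` when credentials are missing is an assumption of the sketch.

```python
from urllib.parse import unquote, urlparse


def auth_from_url(url):
    """Return (username, password) from a URL, or ("", "") if absent.

    Hypothetical illustration of get_auth_from_url's behaviour.
    """
    parsed = urlparse(url)
    try:
        # Credentials may be percent-encoded in the URL, so decode them.
        return unquote(parsed.username), unquote(parsed.password)
    except (AttributeError, TypeError):
        # username/password are None when the URL carries no credentials.
        return "", ""
```

For instance, `auth_from_url("https://user:p%40ss@example.com/")` gives `("user", "p@ss")`.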

◆ get_encoding_from_headers()

get_encoding_from_headers(headers)

Returns the encoding from the given HTTP header dict.

:param headers: dictionary to extract encoding from.

:rtype: str

Definition at line 538 of file utils.py.

References pip._vendor.requests.utils._parse_content_type_header(), and i.

◆ get_encodings_from_content()

get_encodings_from_content(content)

Returns encodings from the given content string.

:param content: bytestring to extract encodings from.

Definition at line 488 of file utils.py.

References i.

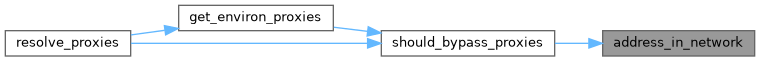

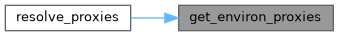

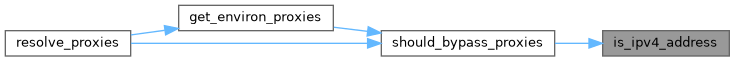

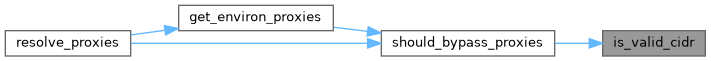

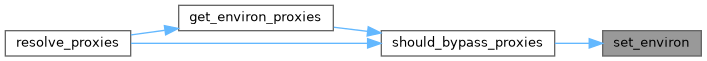

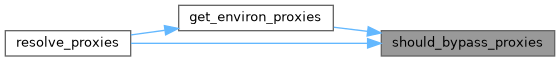

◆ get_environ_proxies()

get_environ_proxies(url, no_proxy=None)

Return a dict of environment proxies.

:rtype: dict

Definition at line 824 of file utils.py.

References pip._vendor.requests.utils.should_bypass_proxies().

Referenced by pip._vendor.requests.utils.resolve_proxies().

◆ get_netrc_auth()

get_netrc_auth(url, raise_errors=False)

Returns the Requests tuple auth for a given url from netrc.

Definition at line 199 of file utils.py.

References i.

◆ get_unicode_from_response()

get_unicode_from_response(r)

Returns the requested content back in unicode.

:param r: Response object to get unicode content from.

Tried:

1. charset from content-type
2. fall back and replace all unicode characters

:rtype: str

Definition at line 590 of file utils.py.

References i.

◆ guess_filename()

guess_filename(obj)

◆ guess_json_utf()

guess_json_utf(data)

:rtype: str

Definition at line 955 of file utils.py.

References i.

◆ is_ipv4_address()

is_ipv4_address(string_ip)

:rtype: bool

Definition at line 707 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.should_bypass_proxies().

◆ is_valid_cidr()

is_valid_cidr(string_network)

Very simple check of the CIDR format in the no_proxy variable.

:rtype: bool

Definition at line 718 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.should_bypass_proxies().
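A "very simple check" of this kind might look like the sketch below. The accepted prefix range of 1 to 32 is an assumption of the sketch, as is the use of `ipaddress.IPv4Address` to validate the address part (which is stricter than some socket-based checks); `looks_like_ipv4_cidr` is a hypothetical name.

```python
import ipaddress


def looks_like_ipv4_cidr(string_network):
    """Rough check that a string is an IPv4 CIDR block like 10.0.0.0/8.

    Hypothetical illustration; the 1..32 prefix range is an assumption.
    """
    if string_network.count("/") != 1:
        return False
    address, _, prefix = string_network.partition("/")
    try:
        prefix_len = int(prefix)
    except ValueError:
        return False
    # Assumed rule: reject /0 and anything longer than 32 bits.
    if not 1 <= prefix_len <= 32:
        return False
    try:
        ipaddress.IPv4Address(address)
    except ValueError:
        return False
    return True
```

Entries such as `"192.168.1.0/24"` pass, while bare addresses or host names with a suffix (for example `"example.com/24"`) are rejected.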

◆ iter_slices()

iter_slices(string, slice_length)

Iterate over slices of a string.

Definition at line 580 of file utils.py.

References i.
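Slicing a string into fixed-size pieces can be written as a small generator. This sketch assumes that a missing or non-positive `slice_length` should produce the whole string as a single slice; `iter_chunks` is a hypothetical name, not the vendored function.

```python
def iter_chunks(string, slice_length):
    """Yield consecutive pieces of `string`, each at most slice_length long.

    Hypothetical illustration of iterating over slices of a string.
    """
    # Assumption: no usable length means "one slice covering everything".
    if slice_length is None or slice_length <= 0:
        slice_length = len(string)
    pos = 0
    while pos < len(string):
        yield string[pos : pos + slice_length]
        pos += slice_length
```

`list(iter_chunks("abcdefg", 3))` produces `["abc", "def", "g"]`; the last slice is simply shorter.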

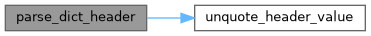

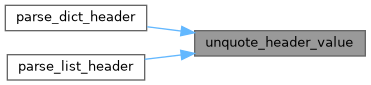

◆ parse_dict_header()

parse_dict_header(value)

Parse lists of key, value pairs as described by RFC 2068 Section 2 and convert them into a Python dict:

>>> d = parse_dict_header('foo="is a fish", bar="as well"')
>>> type(d) is dict
True
>>> sorted(d.items())
[('bar', 'as well'), ('foo', 'is a fish')]

If there is no value for a key it will be `None`:

>>> parse_dict_header('key_without_value')
{'key_without_value': None}

To create a header from the :class:`dict` again, use the :func:`dump_header` function.

:param value: a string with a dict header.

:return: :class:`dict`

:rtype: dict

Definition at line 402 of file utils.py.

References i, and pip._vendor.requests.utils.unquote_header_value().

◆ parse_header_links()

parse_header_links(value)

Return a list of parsed link headers, e.g. for:

Link: <http://.../front.jpeg>; rel=front; type="image/jpeg",<http://.../back.jpeg>; rel=back;type="image/jpeg"

:rtype: list

Definition at line 912 of file utils.py.

References i.
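A Link header like the one in the example can be parsed into one dict per link, keyed by `url` plus each `key=value` parameter. The sketch below is a hypothetical `parse_link_header`; splitting on commas that precede `<` is a simplification that assumes URLs and parameter values do not themselves contain `, <`.

```python
import re


def parse_link_header(value):
    """Parse an HTTP Link header into a list of dicts.

    Hypothetical illustration; each dict has "url" plus any parameters.
    """
    strip_chars = " '\""
    links = []
    value = value.strip(strip_chars)
    if not value:
        return links
    # Each link starts with "<"; split on the commas that precede one.
    for entry in re.split(r", *<", value):
        url, _, params = entry.partition(";")
        link = {"url": url.strip("<> '\"")}
        for param in params.split(";"):
            key, sep, val = param.partition("=")
            if not sep:
                # Stop at the first parameter that is not key=value.
                break
            link[key.strip(strip_chars)] = val.strip(strip_chars)
        links.append(link)
    return links
```

Applied to a header shaped like the docstring example (with concrete URLs), this yields one dict per link with `url`, `rel` and `type` keys.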

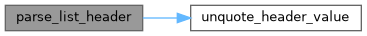

◆ parse_list_header()

parse_list_header(value)

Parse lists as described by RFC 2068 Section 2.

In particular, parse comma-separated lists where the elements of the list may include quoted-strings. A quoted-string could contain a comma. A non-quoted string could have quotes in the middle. Quotes are removed automatically after parsing.

It works like :func:`parse_set_header`, except that items may appear multiple times and case sensitivity is preserved.

The return value is a standard :class:`list`:

>>> parse_list_header('token, "quoted value"')
['token', 'quoted value']

To create a header from the :class:`list` again, use the :func:`dump_header` function.

:param value: a string with a list header.

:return: :class:`list`

:rtype: list

Definition at line 370 of file utils.py.

References i, and pip._vendor.requests.utils.unquote_header_value().

◆ prepend_scheme_if_needed()

prepend_scheme_if_needed(url, new_scheme)

Given a URL that may or may not have a scheme, prepend the given scheme. Does not replace a present scheme with the one provided as an argument.

:rtype: str

Definition at line 987 of file utils.py.

References i.
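The rule "prepend only when no scheme is present" can be sketched with a regular expression for an RFC 3986 scheme followed by `://`. This is a simplified, hypothetical `add_scheme_if_missing`; it assumes inputs are either `scheme://...` or bare `host[:port]/path`, and it does not handle scheme-relative `//host` URLs.

```python
import re

# An RFC 3986 scheme (letter, then letters/digits/+/./-) followed by "://".
_SCHEME_RE = re.compile(r"^[A-Za-z][A-Za-z0-9+.\-]*://")


def add_scheme_if_missing(url, new_scheme):
    """Prefix `new_scheme://` unless the URL already names a scheme.

    Hypothetical, simplified illustration of prepend_scheme_if_needed.
    """
    if _SCHEME_RE.match(url):
        # Never replace a scheme that is already present.
        return url
    return f"{new_scheme}://{url}"
```

Matching on `://` rather than on a bare colon keeps `localhost:8080` from being mistaken for a URL with scheme `localhost`.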

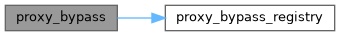

◆ proxy_bypass()

proxy_bypass(host)

Return True if the host should be bypassed.

Checks proxy settings gathered from the environment, if specified, or the registry.

Definition at line 112 of file utils.py.

References pip._vendor.requests.utils.proxy_bypass_registry().

◆ proxy_bypass_registry()

proxy_bypass_registry(host)

Definition at line 76 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.proxy_bypass().

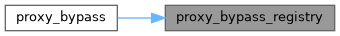

◆ requote_uri()

requote_uri(uri)

Re-quote the given URI.

This function passes the given URI through an unquote/quote cycle to ensure that it is fully and consistently quoted.

:rtype: str

Definition at line 659 of file utils.py.

References pip._vendor.requests.utils.unquote_unreserved().

◆ resolve_proxies()

resolve_proxies(request, proxies, trust_env=True)

This method takes proxy information from a request and configuration input to resolve a mapping of target proxies. This will consider settings such as NO_PROXY to strip proxy configurations.

:param request: Request or PreparedRequest

:param proxies: A dictionary of schemes or schemes and hosts to proxy URLs

:param trust_env: Boolean declaring whether to trust environment configs

:rtype: dict

Definition at line 862 of file utils.py.

References pip._vendor.requests.utils.get_environ_proxies(), i, and pip._vendor.requests.utils.should_bypass_proxies().

◆ rewind_body()

rewind_body(prepared_request)

Move file pointer back to its recorded starting position so it can be read again on redirect.

Definition at line 1079 of file utils.py.

References i.

◆ select_proxy()

select_proxy(url, proxies)

Select a proxy for the URL, if applicable.

:param url: The URL of the request

:param proxies: A dictionary of schemes or schemes and hosts to proxy URLs

Definition at line 836 of file utils.py.

References i.
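Because `proxies` may be keyed by scheme alone or by scheme and host, a selector has to decide which key wins. The sketch below assumes a most-specific-first order (`scheme://host`, then `scheme`, then `all://host`, then `all`); that ordering and the name `pick_proxy` are assumptions for illustration.

```python
from urllib.parse import urlparse


def pick_proxy(url, proxies):
    """Return the most specific proxy URL for `url`, or None.

    Hypothetical illustration; the key priority order is an assumption.
    """
    proxies = proxies or {}
    parts = urlparse(url)
    if parts.hostname is None:
        # No host to match on: fall back to the scheme, then "all".
        return proxies.get(parts.scheme, proxies.get("all"))
    # Most specific key first: scheme+host, scheme, any-scheme+host, any.
    keys = [
        f"{parts.scheme}://{parts.hostname}",
        parts.scheme,
        f"all://{parts.hostname}",
        "all",
    ]
    for key in keys:
        if key in proxies:
            return proxies[key]
    return None
```

A host-specific entry such as `"http://example.com"` therefore overrides a plain `"http"` entry for that host only.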

◆ set_environ()

set_environ(env_name, value)

Set the environment variable 'env_name' to 'value'.

Save the previous value, yield, and then restore the previous value stored in the environment variable 'env_name'.

If 'value' is None, do nothing.

Definition at line 743 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.should_bypass_proxies().
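The save/yield/restore behaviour described above maps naturally onto `contextlib.contextmanager`. This sketch uses the hypothetical name `temporary_environ` and assumes that a variable which was previously unset should be deleted again on exit.

```python
import contextlib
import os


@contextlib.contextmanager
def temporary_environ(env_name, value):
    """Set env_name=value for the duration of a with-block.

    Hypothetical illustration of set_environ's documented behaviour.
    """
    if value is None:
        # Nothing to change: just run the block.
        yield
        return
    old_value = os.environ.get(env_name)
    os.environ[env_name] = value
    try:
        yield
    finally:
        # Restore the previous state, deleting the variable if it was unset.
        if old_value is None:
            del os.environ[env_name]
        else:
            os.environ[env_name] = old_value
```

The `finally` clause restores the environment even if the block raises.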

◆ should_bypass_proxies()

should_bypass_proxies(url, no_proxy)

Returns whether we should bypass proxies or not.

:rtype: bool

Definition at line 764 of file utils.py.

References pip._vendor.requests.utils.address_in_network(), i, pip._vendor.requests.utils.is_ipv4_address(), pip._vendor.requests.utils.is_valid_cidr(), and pip._vendor.requests.utils.set_environ().

Referenced by pip._vendor.requests.utils.get_environ_proxies(), and pip._vendor.requests.utils.resolve_proxies().

◆ stream_decode_response_unicode()

stream_decode_response_unicode(iterator, r)

Stream decodes an iterator.

Definition at line 563 of file utils.py.

References i.
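Stream decoding needs an incremental decoder, because a multi-byte character can be split across two chunks. In this sketch the hypothetical `stream_decode` takes the encoding name directly instead of a response object `r`, which is an assumption made to keep it self-contained.

```python
import codecs


def stream_decode(iterator, encoding):
    """Decode an iterator of byte chunks into text chunks.

    Hypothetical illustration; takes an encoding name instead of `r`.
    """
    if encoding is None:
        # No known encoding: pass chunks through untouched.
        yield from iterator
        return
    # The incremental decoder buffers partial multi-byte sequences.
    decoder = codecs.getincrementaldecoder(encoding)(errors="replace")
    for chunk in iterator:
        text = decoder.decode(chunk)
        if text:
            yield text
    # Flush whatever is still buffered at the end of the stream.
    tail = decoder.decode(b"", final=True)
    if tail:
        yield tail
```

Feeding `[b"caf", b"\xc3", b"\xa9!"]` with `"utf-8"` joins to `"café!"`, even though the two bytes of `é` arrive in different chunks.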

◆ super_len()

super_len(o)

Definition at line 133 of file utils.py.

References i.

◆ to_key_val_list()

to_key_val_list(value)

Take an object and test to see if it can be represented as a dictionary. If it can be, return a list of tuples, e.g.,

::

    >>> to_key_val_list([('key', 'val')])
    [('key', 'val')]
    >>> to_key_val_list({'key': 'val'})
    [('key', 'val')]
    >>> to_key_val_list('string')
    Traceback (most recent call last):
    ...
    ValueError: cannot encode objects that are not 2-tuples

:rtype: list

Definition at line 340 of file utils.py.

References i.

◆ unquote_header_value()

unquote_header_value(value, is_filename=False)

Unquotes a header value (reversal of :func:`quote_header_value`). This does not use the real unquoting but what browsers are actually using for quoting.

:param value: the header value to unquote.

:rtype: str

Definition at line 437 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.parse_dict_header(), and pip._vendor.requests.utils.parse_list_header().

◆ unquote_unreserved()

unquote_unreserved(uri)

Un-escape any percent-escape sequences in a URI that are unreserved characters. This leaves all reserved, illegal and non-ASCII bytes encoded.

:rtype: str

Definition at line 635 of file utils.py.

References i.

Referenced by pip._vendor.requests.utils.requote_uri().
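The unquote/quote cycle can be sketched in two steps: decode only the `%XX` escapes that stand for RFC 3986 unreserved characters (letters, digits and `-._~`), then quote the result with `%` treated as safe so the remaining escapes survive. The names `unquote_unreserved_sketch` and `requote_sketch`, the exact `safe` set, and raising `ValueError` on a bad escape are all assumptions of this illustration.

```python
import string
from urllib.parse import quote

# RFC 3986 unreserved characters: letters, digits and "-._~".
UNRESERVED = frozenset(string.ascii_letters + string.digits + "-._~")


def unquote_unreserved_sketch(uri):
    """Decode %XX escapes only when they encode an unreserved character."""
    parts = uri.split("%")
    for i in range(1, len(parts)):
        h = parts[i][:2]
        if len(h) == 2 and h.isalnum():
            try:
                c = chr(int(h, 16))
            except ValueError:
                raise ValueError(f"Invalid percent-escape sequence: '{h}'") from None
            # Unreserved: decode it. Reserved: keep the escape as-is.
            parts[i] = c + parts[i][2:] if c in UNRESERVED else "%" + parts[i]
        else:
            # Not a two-character escape: keep the literal "%".
            parts[i] = "%" + parts[i]
    return "".join(parts)


def requote_sketch(uri):
    # Assumed safe set; "%" is included so existing escapes are not re-quoted.
    return quote(unquote_unreserved_sketch(uri), safe="!#$%&'()*+,/:;=?@[]~")
```

For example, `%7E` becomes `~` while `%2F` stays encoded, and a literal space is quoted as `%20`.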

◆ urldefragauth()

urldefragauth(url)

Given a URL, remove the fragment and the authentication part.

:rtype: str

Definition at line 1062 of file utils.py.

References i.
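Removing the fragment and the `user:password@` part can be sketched with `urllib.parse.urlsplit` and `urlunsplit`. `strip_fragment_and_auth` is a hypothetical name; the sketch assumes the host and optional port follow the last `@` in the network location.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_fragment_and_auth(url):
    """Drop the #fragment and any user:password@ prefix from a URL.

    Hypothetical illustration of urldefragauth's documented behaviour.
    """
    parts = urlsplit(url)
    # Everything after the last "@" in the netloc is host[:port].
    netloc = parts.netloc.rsplit("@", 1)[-1]
    # Rebuild the URL with an empty fragment.
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, ""))
```

The query string is kept, so `https://user:pw@example.com:8443/a?b=1#frag` becomes `https://example.com:8443/a?b=1`.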

Variable Documentation

◆ _null

◆ _null2

◆ _null3

◆ DEFAULT_ACCEPT_ENCODING

str DEFAULT_ACCEPT_ENCODING